Table of Contents

1. Introduction to AI Stocks

2. Why These 3 Small Stocks Could Win Big

3. Micron Technology

4. Arista Networks

5. Vertiv Holdings

6. Key Takeaways

The key to finding the best stocks to buy now is understanding a company's products, not just its profits. Huge growth happens when a company has the perfect product for a new or expanding market. If you invested $10,000 into Nvidia just 5 years ago, you'd have over $140,000 today. And if you invested that money into Palantir, you'd have almost $200,000 right now. But the formula for finding winners changes when interest rates start coming down.

So in this video, I'll highlight 3 smaller AI stocks that could win big as the Federal Reserve keeps cutting rates. Your time is valuable, so let's get right into it. Here's what I'll be covering up front:

- Why these 3 small stocks could win big as interest rates start to fall
- Micron Technology, which makes high bandwidth memory for Nvidia's Blackwell GPUs
- Arista Networks, which makes high-performance Ethernet switches to connect those GPUs
- Vertiv Holdings, which makes the data center systems that power and cool them

That way, we're holding some of the key players across the entire AI data center market, regardless of which chip company or hyperscaler actually ends up on top.

That's a great way to get rich without getting lucky. So let's start with why I'm talking about these three companies specifically. In my most recent video, I walked through how interest rates affect the stock market, which stocks they affect the most, and exactly what to look for as rates start to fall.

Long story short, lower interest rates mean people and businesses can borrow more money, which means they spend more money, which eventually turns into more revenue and earnings for the companies that we invest in. At the same time, yields go down for bonds and bank accounts, which makes stocks look more attractive, so investors are willing to buy stocks at higher price-to-earnings and price-to-sales ratios.

So when the Federal Reserve cuts rates, it's really giving the stock market a double boost: higher earnings for companies and higher price-to-earnings ratios on their stocks. But not all stocks are created equal. When we looked at how rate cuts affected the S&P 500's value index versus its growth index, we saw that growth stocks returned almost double what value stocks did one month, three months, six months, and one year after the first rate cut. That's because value stocks are priced more around their value today, like their current revenues, their current free cash flows, or the current assets on their balance sheets.
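Here's a minimal Python sketch that puts numbers on the double boost. It uses the identity share price = earnings per share × P/E ratio. The $5 EPS, the 20x P/E, and the 10% changes are hypothetical round numbers chosen for illustration. They are not figures for any real stock or index.

```python
# Minimal sketch of the "double boost": share price = earnings per share x P/E ratio.
# All inputs are hypothetical round numbers, not data for any real stock.


def share_price(eps: float, pe_ratio: float) -> float:
    """Price implied by earnings per share (EPS) and a price-to-earnings multiple."""
    return eps * pe_ratio


def price_change_pct(eps: float, pe_ratio: float,
                     eps_growth: float, pe_expansion: float) -> float:
    """Percent price change when EPS grows and the P/E multiple expands at the same time."""
    before = share_price(eps, pe_ratio)
    after = share_price(eps * (1 + eps_growth), pe_ratio * (1 + pe_expansion))
    return (after / before - 1) * 100


if __name__ == "__main__":
    # Assumed: $5 EPS at a 20x P/E, then 10% earnings growth and 10% multiple expansion.
    print(f"Earnings only: {price_change_pct(5.0, 20.0, 0.10, 0.0):.1f}%")
    print(f"Double boost:  {price_change_pct(5.0, 20.0, 0.10, 0.10):.1f}%")
```

With these assumed inputs, 10% earnings growth on its own lifts the price 10%. Add 10% multiple expansion on top and the price rises about 21%, because the two effects multiply rather than add.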

But growth stocks are priced around their growth and the revenues, free cash flows, and future assets that that growth implies. That means when the Fed cuts interest rates, growth stocks benefit way more because they can borrow more money to make big investments into hiring new employees, buying new equipment, and making new products to achieve that growth in the first place. So, putting it all together, every winning stock should have the following things in common.
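One simplified way to see why far-off growth reacts more to rates is to discount a single $100 cash flow at a flat rate, before and after a one-point cut. The 8% and 7% rates and the flat-rate model are assumptions for illustration. This is not how any particular analyst values these stocks.

```python
# Minimal sketch: how much a rate cut raises the present value of a cash flow,
# depending on how far in the future it arrives. Uses a single flat discount rate,
# which is a simplifying assumption; the 8% and 7% rates are hypothetical.


def present_value(cash_flow: float, rate: float, years: int) -> float:
    """Discount a cash flow received `years` from now back to today."""
    return cash_flow / (1 + rate) ** years


def pv_gain_pct(years: int, old_rate: float, new_rate: float) -> float:
    """Percent increase in present value when the discount rate falls."""
    before = present_value(100.0, old_rate, years)
    after = present_value(100.0, new_rate, years)
    return (after / before - 1) * 100


if __name__ == "__main__":
    # Near-term cash (value-style) vs. cash expected a decade out (growth-style).
    for years in (1, 10):
        gain = pv_gain_pct(years, 0.08, 0.07)
        print(f"Cash flow in {years:>2} year(s): +{gain:.1f}% in today's value")
```

The cash flow one year out gains roughly 1% in today's value. The one ten years out gains roughly 10%. That points the same way as the growth-versus-value gap described above.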

First, they obviously need to be in markets where spending is already growing fast, since lower interest rates will just add fuel to that fire. The global artificial intelligence market is expected to grow almost 19x over the next nine years, which is a compound annual growth rate of over 38% through 2034.
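You can check growth forecasts like this yourself. The compound annual growth rate is the total growth multiple raised to the power 1/years, minus one. This sketch treats the article's rounded multiples as exact.

```python
# Compound annual growth rate (CAGR) implied by a total growth multiple:
#   CAGR = multiple ** (1 / years) - 1


def cagr_from_multiple(multiple: float, years: float) -> float:
    """Annual growth rate, as a fraction, that compounds to `multiple` over `years`."""
    if multiple <= 0 or years <= 0:
        raise ValueError("multiple and years must be positive")
    return multiple ** (1 / years) - 1


if __name__ == "__main__":
    # Multiples quoted in this article (treated as exact for illustration).
    print(f"Global AI market, 19x over 9 years: {cagr_from_multiple(19, 9):.1%}")
    print(f"AI memory chips, 9x over 9 years:   {cagr_from_multiple(9, 9):.1%}")
```

That works out to roughly 38.7% and 27.7% a year. Both are consistent with the "over 38%" and "over 27%" figures quoted for the AI market and the AI memory market.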

That's more than triple the average annual growth rate of the S&P 500, so AI stocks definitely check this box. Second, they have to have a deep moat so they can't be easily outcompeted or replaced. Companies with patents, proprietary chips, software, or networking technology can win more business and protect their margins as AI spending continues to expand. Third, and this is a big one, these companies should sell something that hyperscalers like Microsoft, Amazon, Google, and Meta Platforms want a lot of, and want right now. We're not looking for the companies spending the money; we're looking for the companies they're spending it with. That's why all my videos focus on companies like Nvidia, AMD, TSMC, Broadcom, Palantir, CrowdStrike, and ARM.

Companies with hardware and software platforms that many huge businesses pay to build on top of. That's also how I picked the three stocks in this video. They're just a little smaller and a little less talked about, making them great additions to the bigger, safer stocks I cover when interest rates start to fall. Keep in mind that smaller stocks tend to be riskier, so think about your overall portfolio and make sure that you know what you're holding.

Seriously, Warren Buffett became one of the richest men in the world by staying inside his circle of competence and investing in businesses he understands. And right now, the most important thing for investors to understand is AI.

And Outskill is giving the first 1,000 people who sign up with my link a free seat. Whether you work in tech or sales, marketing or HR, you'll learn 10 of the most powerful AI tools like Claude and Make.com, best practices for prompt engineering, how to analyze data, create tools, and automate workflows without coding, and even how to build your own AI agents. You'll also get free lifetime access to Outskill's exclusive paid AI community, to keep growing alongside over 5,000 other AI professionals.

No wonder over 10 million people from 40 different countries have already participated, and slots for this one are filling up faster than ever. This is a great way to level up your AI knowledge, get a serious competitive advantage, and understand the science behind the stocks. So make sure to register for your free seat with my link below today. Alright, now that you have the context on why I picked these stocks, let's talk about the stocks themselves. Starting with Micron.

Micron Technology makes memory for the entire computing industry, including solid-state drives, RAM modules, and flash memory for data centers, PCs, smartphones, and even cars. They have a 25% share of the global memory chip market, putting them behind only SK Hynix and Samsung in terms of market share. But don't let that third-place ranking fool you. First, Micron is the only US company of these three.

SK Hynix and Samsung are both South Korean companies, which means they could face tariffs and supply chain issues that Micron might avoid. Second, 25% is still a massive share of any market. Microsoft Azure and Google Cloud are two of the most successful cloud infrastructure businesses on the planet, and they have a smaller share of their markets than Micron does of the memory market.

And third, the market for AI memory chips is expected to grow 9x over the next 9 years, which would be a compound annual growth rate of over 27% through 2034. The memory market is roughly split between volatile memory, like the HBM chips that get attached to NVIDIA's GPUs, and non-volatile memory, like solid-state drives and flash memory, where AI datasets, model weights, and training results get stored. Let's talk about high bandwidth memory, since that's what AI data centers need the most.

High bandwidth memory is designed to move huge volumes of data at extremely high speeds by stacking memory chips and connecting them to a shared hub. HBM is much faster and much more power efficient than other types of memory, since it shortens the physical distance that data needs to travel. Modern AI models handle gigantic data sets and billions of parameters that need to be fed into an AI processor as fast as possible, and traditional memory would bottleneck that entire process.
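Here are some rough numbers on why stacking matters. Peak memory bandwidth is approximately the interface width times the data rate per pin, and stacking lets one HBM package expose a very wide interface right next to the processor. The 1024-bit width, the 9.2 Gb/s pin speed, and the DDR5-6400 comparison below are assumed, illustrative figures, not Micron's published specs.

```python
# Back-of-the-envelope peak bandwidth: interface width (bits) x per-pin data rate.
# The widths and pin speeds below are assumed, illustrative figures for an
# HBM3E-class stack and a single DDR5-6400 channel, not Micron product specs.


def peak_bandwidth_gb_s(bus_width_bits: int, gbit_per_pin: float) -> float:
    """Peak transfer rate in gigabytes per second (8 bits per byte)."""
    return bus_width_bits * gbit_per_pin / 8


if __name__ == "__main__":
    hbm_stack = peak_bandwidth_gb_s(1024, 9.2)   # assumed: 1024-bit stack interface
    ddr5_channel = peak_bandwidth_gb_s(64, 6.4)  # assumed: 64-bit DDR5 channel
    print(f"HBM stack:    {hbm_stack:,.0f} GB/s")
    print(f"DDR5 channel: {ddr5_channel:,.0f} GB/s")
    print(f"Ratio:        {hbm_stack / ddr5_channel:.0f}x")
```

Under those assumptions, one HBM stack moves roughly 1.2 TB/s, compared with about 51 GB/s for one DDR5 channel. That is a gap of around 23x, before counting the multiple stacks that sit around each GPU.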

High bandwidth memory is the reason that AI models can be trained so fast, have such large parameter counts, and provide powerful inference results without waiting for hours on end. HBM is Micron's single fastest-growing product category in 2025, and they have much more demand than supply.

Micron's most advanced memory products are sold out for months in advance, and according to their latest earnings call, they expect demand to nearly double from $18 billion last year to $35 billion this year and continue growing into 2026 and beyond. Industry analysts expect high bandwidth memory to be the main growth driver for Micron going forward, with HBM revenues projected to blow past $6 billion per year and hit a $10 billion annual run rate in 2026.

Like I said at the start of this video, huge growth happens when a company has the perfect product for a new or expanding market. For Micron, that's high bandwidth memory, and we'll see just how big it grows now that interest rates are going down and companies can spend even more on AI infrastructure. That's also why the second company on my list is Arista Networks, ticker symbol ANET.

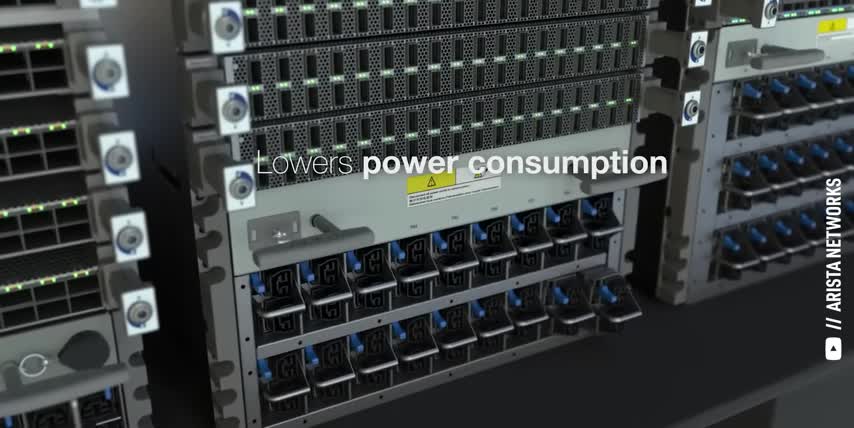

Arista makes its money by designing specialized Ethernet switches, network control software, and management tools that let their customers build huge, reliable, and fast networks at scale for a wide variety of applications, like cloud computing, video streaming, financial trading, and more.

But Arista's key role in AI comes from two major factors: their network performance and their open standards. Training and running AI models at scale involves breaking up huge amounts of data, moving that data between thousands of chips, and then putting those partial results back together to form an output. That means the whole system is only as fast as the slowest part of the network, and any slowdowns or lost data mean wasted compute time, higher costs, and lower reliability. Arista's switches and software are engineered for extremely high speeds and reliability with very low latency.
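Here's a minimal sketch of the "only as fast as the slowest part" idea. It assumes a simplified synchronous step: chips compute, then wait for every transfer to finish before results are combined. The timings are made up, and real systems overlap compute with communication, which this sketch ignores.

```python
# Minimal sketch of why a synchronous AI cluster is "only as fast as the slowest part":
# each step, every chip computes, then waits for ALL data transfers to finish before
# the partial results can be combined. All timings are hypothetical.


def step_time_ms(compute_ms: float, transfer_ms: list[float]) -> float:
    """One synchronous step: local compute, then wait on the slowest transfer."""
    return compute_ms + max(transfer_ms)


if __name__ == "__main__":
    healthy = step_time_ms(100.0, [10.0] * 8)            # 8 links, all equally fast
    one_slow = step_time_ms(100.0, [10.0] * 7 + [40.0])  # a single congested link
    print(f"Healthy network: {healthy:.0f} ms per step")
    print(f"One slow link:   {one_slow:.0f} ms per step "
          f"({(one_slow / healthy - 1):.0%} slower for the whole cluster)")
```

In this toy example, one congested link out of eight makes every step about 27% slower for the entire cluster. That is why low latency and lossless delivery matter so much at scale.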

And because they use open standards, their customers don't get locked into a single vendor like they do with Nvidia's InfiniBand. Arista has a single, highly programmable operating system called EOS that runs on all of their networking equipment, which makes managing and automating their systems simple and scalable.

They also enable things like lossless networking, special load balancing, and monitoring network health so that data center operators can find and solve their most expensive bottlenecks when they're running huge AI workloads.

Having an open and programmable standard for Ethernet makes Arista a top networking supplier for most data centers on Earth, since well over 90% of the world's overall data center infrastructure runs on Ethernet today, and the companies running them want to get the most bang for their buck on their infrastructure. Don't forget that most of today's workloads still have nothing to do with AI.

Another thing that I like about Arista Networks is that they work closely with Broadcom. Arista's switches and software are built around Broadcom's Tomahawk switch chips, which is how they're able to stay ahead of the growing networking needs for AI. In fact, the global AI data center market is expected to grow almost 9x over the next nine years, which would be a compound annual growth rate of 27% through 2034. And that's before the Federal Reserve started lowering interest rates, which means AI data center spending could ramp up even faster than that from here.

Case in point, Wall Street analysts expect Arista's AI networking revenue to grow by 70% year over year, as companies like Microsoft, Meta Platforms, and Oracle spend billions of dollars to connect their AI clusters with Arista's switches and software.

But all of these AI clusters are useless if you can't power them and keep them cool, which is why the third stock on my list is Vertiv Holdings, ticker symbol VRT. And if you feel I've earned it so far, consider hitting the like button and subscribing to the channel. That really helps me out, and it lets me know to make more content like this. Thanks, and with that out of the way, let's talk about powering and cooling these AI clusters.

It turns out that power accounts for 60-70% of a data center's total operating costs. That's why power efficiency in chips and AI infrastructure is so important. Cooling typically represents 30-40% of a data center's total power consumption, which means it can be anywhere from 18-28% of a data center's total costs. So, power and cooling make a huge impact on the costs of AI.
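The 18-28% range comes from multiplying the two ranges together. Here's that arithmetic as a quick sketch, using only the percentages quoted above.

```python
# Checks the cost arithmetic above: cooling's share of total operating costs
# = (power's share of costs) x (cooling's share of power).


def cooling_cost_share(power_share_of_costs: float, cooling_share_of_power: float) -> float:
    """Fraction of total operating costs spent on powering the cooling systems."""
    return power_share_of_costs * cooling_share_of_power


if __name__ == "__main__":
    low = cooling_cost_share(0.60, 0.30)   # low end of both ranges
    high = cooling_cost_share(0.70, 0.40)  # high end of both ranges
    print(f"Cooling: {low:.0%} to {high:.0%} of total operating costs")
```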

And one thing a lot of investors still don't realize is that around 80-90% of all data center server racks are still air-cooled today. But industry estimates suggest that up to 80% of that will eventually become direct-to-chip liquid cooling, which is up to 3,000 times more efficient than air cooling.
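One rough way to read the "3,000 times" comparison is heat-carrying capacity: how much heat a cubic meter of water absorbs per degree of warming, compared with a cubic meter of air. The property values below are approximate room-temperature textbook figures. This is only one interpretation of "more efficient", not a full model of how efficient a cooling system is.

```python
# One back-of-the-envelope way to read the "~3,000x" comparison: how much heat
# a cubic meter of water vs. air absorbs per degree of warming.
# Property values are approximate textbook figures near room temperature (assumed).

WATER_DENSITY_KG_M3 = 1000.0
WATER_SPECIFIC_HEAT_J_KG_K = 4186.0
AIR_DENSITY_KG_M3 = 1.2
AIR_SPECIFIC_HEAT_J_KG_K = 1005.0


def volumetric_heat_capacity(density: float, specific_heat: float) -> float:
    """Joules absorbed per cubic meter per kelvin of temperature rise."""
    return density * specific_heat


if __name__ == "__main__":
    water = volumetric_heat_capacity(WATER_DENSITY_KG_M3, WATER_SPECIFIC_HEAT_J_KG_K)
    air = volumetric_heat_capacity(AIR_DENSITY_KG_M3, AIR_SPECIFIC_HEAT_J_KG_K)
    print(f"Water carries ~{water / air:,.0f}x more heat per unit volume than air")
```

With these values, water carries roughly 3,500x more heat per unit volume than air. That is in the same ballpark as the figure above.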

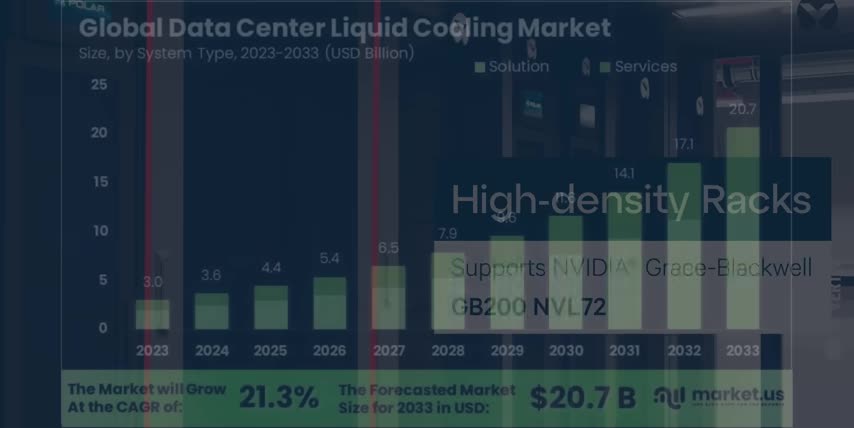

Even most AI clusters are still running their NVIDIA H100s at low enough power to be air-cooled, because liquid cooling obviously comes with some serious infrastructure changes, and that means extra downtime, extra costs, and other extra risks. As a result, the data center liquid cooling market is expected to grow almost 5x over the next 8 years, which would be a compound annual growth rate of 21% through 2033.
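As a quick sanity check, compounding 21% a year for 8 years should land near the "almost 5x" figure. This sketch just runs the growth rate forward.

```python
# Forward check of the liquid cooling forecast: what does 21% per year compound to?


def growth_multiple(cagr: float, years: int) -> float:
    """Total growth multiple after compounding `cagr` for `years` years."""
    return (1 + cagr) ** years


if __name__ == "__main__":
    print(f"21% a year for 8 years: {growth_multiple(0.21, 8):.2f}x")
```

It comes out to about 4.6x, which lines up with "almost 5x".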

And if you've watched my recent videos, you know that NVIDIA's Blackwell and Blackwell Ultra systems straight up require direct-to-chip liquid cooling, which means any data center that wants to use them needs to transition those racks to liquid cooling first. Direct-to-chip liquid cooling is where a heat-conductive copper plate is physically mounted to the chip. That plate is then connected to two pipes.

One pipe brings in cool water to absorb the chip's heat, and the other pipe carries the hot water away. And since Nvidia is already shipping their Blackwell systems, I think this transition to liquid cooling will be much bigger and happen much faster than most analysts expect. That's where Vertiv Holdings comes in: Vertiv makes power and thermal management systems for data centers.

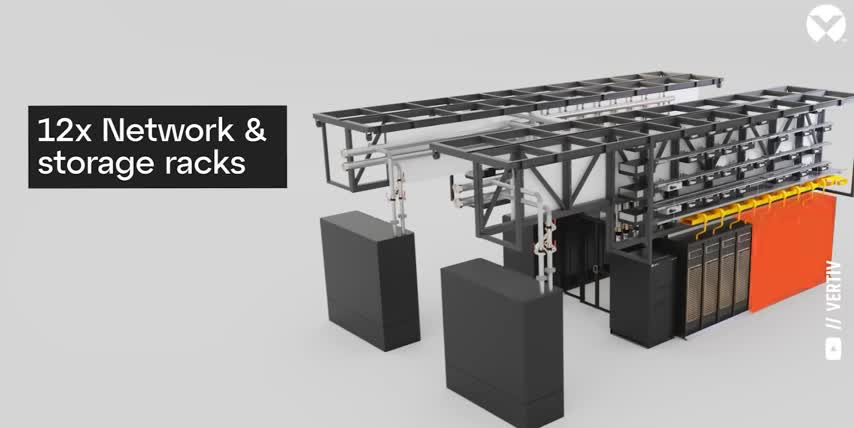

For example, their Liebert liquid cooling systems focus on high-density server deployments like the massive GPU clusters for AI training and inference. Vertiv's cooling systems are modular and can scale up to 600 kW of cooling per unit, which means data centers can add liquid cooling to five 120 kW Blackwell racks at a time, or quickly retrofit their existing facilities to support them without any other major infrastructure overhauls.
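Here's the rack math as a minimal sizing sketch, using the 600 kW per-unit and 120 kW per-rack figures above. The 12-rack deployment is hypothetical. A real design would also budget for redundancy and derating, which this sketch ignores.

```python
import math

# Minimal sizing sketch using the figures above: a 600 kW cooling unit and 120 kW racks.
# It ignores redundancy (e.g., spare N+1 units) and real-world derating, both of which
# a real deployment would need to account for.


def racks_per_unit(unit_capacity_kw: float, rack_load_kw: float) -> int:
    """Whole racks one cooling unit can serve without exceeding its capacity."""
    racks = int(unit_capacity_kw // rack_load_kw)
    if racks < 1:
        raise ValueError("a single rack exceeds one unit's cooling capacity")
    return racks


def units_needed(num_racks: int, unit_capacity_kw: float, rack_load_kw: float) -> int:
    """Cooling units required for a group of identical racks, rounded up."""
    return math.ceil(num_racks / racks_per_unit(unit_capacity_kw, rack_load_kw))


if __name__ == "__main__":
    print(racks_per_unit(600, 120))    # racks served by one 600 kW unit
    print(units_needed(12, 600, 120))  # hypothetical 12-rack deployment
```

With these figures, one unit covers 5 racks, so the hypothetical 12-rack deployment would need 3 units.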

That's why Vertiv supplies critical cooling solutions to all three major hyperscalers: Amazon Web Services, Google Cloud, and Microsoft Azure. Vertiv also supplies them with core power systems, like their Liebert EXL, which is a high-capacity, uninterruptible power supply designed specifically for hyperscale and cloud facilities, because it supplies large amounts of energy at very high efficiencies. So, there you have it.

Three smaller AI stocks that could win big as the Federal Reserve keeps cutting rates, because they focus on the high bandwidth memory, the high-speed networking, and the high-efficiency power and cooling that every AI data center needs to stay competitive.

And as long as there's strong demand for NVIDIA's GPUs, Broadcom's ASICs and networking, or any other AI chips, all three of these companies should keep growing faster than expected, especially as interest rates keep coming down, making them all a great way to get rich without getting lucky.

Key Takeaways

- Invest in companies with a deep moat, such as patents, proprietary chips, software, or networking technology.

- Look for companies that sell products or services that hyperscalers like Microsoft, Amazon, Google, and Meta Platforms want and need.

- Consider investing in smaller, lesser-known stocks that have the potential for huge growth.

- Keep an eye on interest rates, as lower rates can boost the stock market and benefit growth stocks.

- Understand the importance of AI and its impact on various industries and markets.

And if you want to see what else I'm investing in to get rich without getting lucky, check out this video next. Either way, thanks for watching, and until next time, this is Ticker Symbol: YOU.

My name is Alex, reminding you that the best investment you can make is in you.
