I was wrong about AMD. AMD and OpenAI just announced one of the biggest partnerships of the entire AI era, but they also just showed us where every single AI stock is headed next. So in this episode, I'll break down everything you need to know about this massive partnership and what it means for AMD, Nvidia, and every other AI stock that we invest in. Your time is valuable, so let's get right into it.

First things first, I'm not here to hold you hostage, so here's what I'll be covering up front: the huge new partnership between AMD and OpenAI, what this deal means for AMD's data center business, how this deal is different from OpenAI's $100 billion deal with Nvidia, and of course, what all this means for every single AI stock as a result. But let's start with the details of the deal itself.

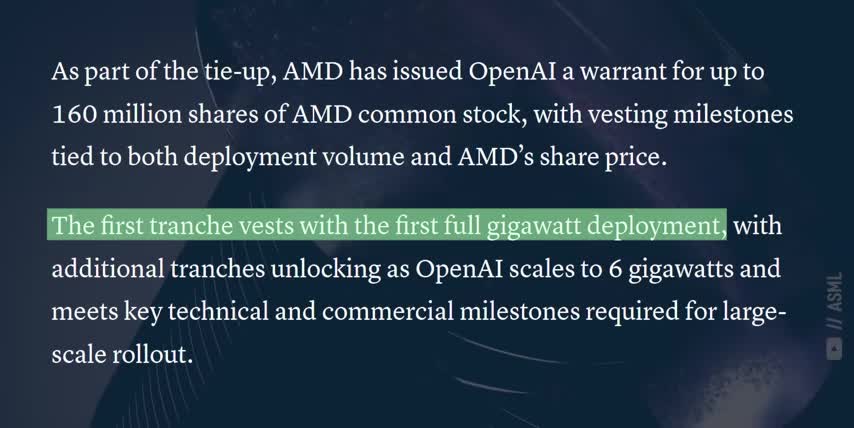

Earlier this week, OpenAI and AMD announced a plan to deploy 6 gigawatts of AMD's GPUs over the next few years. As part of the agreement, OpenAI would get warrants to buy up to 160 million shares of AMD stock for just one cent apiece, and they vest as specific milestones get reached. For example, the first block of shares unlocks when the first gigawatt of GPUs gets deployed, while other blocks of shares are tied to AMD's stock hitting certain prices. Let's unpack exactly what that means.

Here's a table I made of AMD's data center GPUs and their TDPs, or Thermal Design Power, which really depends on what workloads they're running and whether they're air or liquid cooled. OpenAI wants to deploy 6 gigawatts of AMD Instinct GPUs across multiple generations. If we assume they'll all be liquid cooled, we're really talking about something like 4.3 million GPUs, depending on the exact mix.
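To make that estimate concrete, here is a minimal sketch of the power-to-GPU arithmetic. The 1,400 W per GPU figure is an assumption chosen for illustration (a plausible average for liquid-cooled parts), not a number from the table, and the function name is hypothetical. The real count depends on the mix of generations.

```python
# Rough GPU count from a power budget.
# ASSUMPTION: about 1,400 W per liquid-cooled GPU on average; the real
# figure depends on which accelerator generations actually get deployed.
TOTAL_POWER_WATTS = 6e9   # 6 gigawatts, from the announcement
WATTS_PER_GPU = 1_400     # assumed average TDP, for illustration only


def gpus_for_power(total_watts: float, watts_per_gpu: float) -> int:
    """Return how many GPUs fit in a power budget.

    Ignores cooling, networking, and CPU overhead, so it is an upper bound.
    """
    return int(total_watts // watts_per_gpu)


gpu_count = gpus_for_power(TOTAL_POWER_WATTS, WATTS_PER_GPU)
print(f"{gpu_count:,} GPUs")
```

Under that assumption the result lands close to the 4.3 million figure; a lower average TDP would push the count higher.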

AMD's MI350X accelerators cost around $25,000 apiece, and AMD's data center revenues came in at $3.2 billion last quarter. About half of that revenue comes from their EPYC line of data center CPUs, so AMD really makes around $1.6 billion in accelerator revenues each quarter. Divide that number by $25,000, and AMD sold about 64,000 GPUs last quarter.

Keep in mind that this number is probably a little high, since some of their data center revenues also come from FPGAs after their Xilinx acquisition, data processing units after their Pensando acquisition, and so on. So, let's just say that AMD currently sells roughly 200,000 data center GPUs per year. That means this OpenAI deal represents over 20 years' worth of AMD's annual data center GPU shipments.
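The back-of-the-envelope math here can be sketched in a few lines. Every input is a figure quoted above; the variable names are just illustrative.

```python
# Back-of-the-envelope estimate of AMD's current GPU shipments,
# using only the figures quoted in this video.
ACCELERATOR_REVENUE_PER_QUARTER = 1.6e9  # about half of $3.2B data center revenue
PRICE_PER_GPU = 25_000                   # approximate MI350X price
GPUS_PER_YEAR_ROUNDED = 200_000          # rounded down for FPGA/DPU revenue
DEAL_GPUS = 4_300_000                    # estimated GPUs in the 6 GW deal

gpus_per_quarter = ACCELERATOR_REVENUE_PER_QUARTER / PRICE_PER_GPU
gpus_per_year_upper_bound = gpus_per_quarter * 4
years_of_shipments = DEAL_GPUS / GPUS_PER_YEAR_ROUNDED

print(f"GPUs per quarter:          {gpus_per_quarter:,.0f}")
print(f"GPUs per year (upper):     {gpus_per_year_upper_bound:,.0f}")
print(f"Deal in years of shipments: {years_of_shipments:.1f}")
```

The unrounded annual figure is higher than 200,000, which is why the rounded number is treated as a conservative estimate.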

Even just the first phase, where AMD is scheduled to supply 1 gigawatt of GPUs starting in the second half of next year, still represents around a million GPUs, which is 5 times the GPUs AMD currently sells each year. AMD generated roughly $1.6 billion of AI accelerator revenues in Quarter 2, which would be around a $7 or $8 billion annual run rate after accounting for their growth.

That means this partnership will more than double AMD's entire data center GPU business and grow their total revenues by about an extra 40%, depending on which Instinct accelerators they actually supply and at what costs.

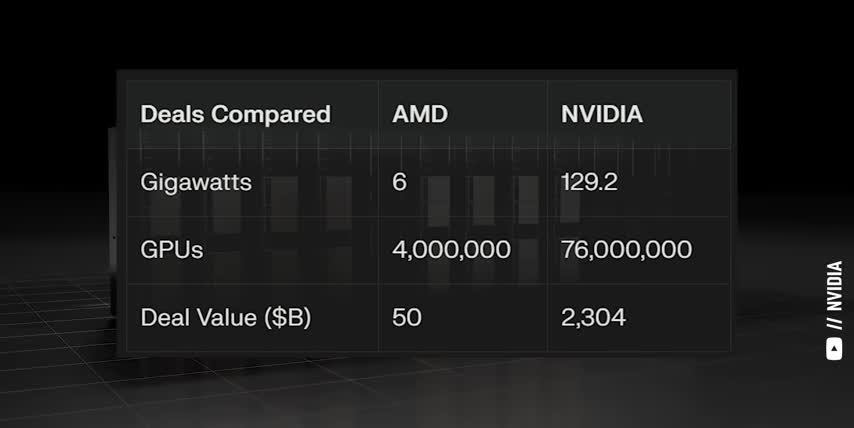

To understand just how mind-blowingly enormous this deal is for AMD, let me show you what would happen if Nvidia got a deal this big instead, accounting for their size difference. If Nvidia got a deal for 20 years' worth of their data center GPU sales, it would be a trillion-dollar deal to supply roughly 70 million GPUs. That would be 120 gigawatts of compute, which is as much as every existing data center on earth today combined. That's how big this deal is for AMD relative to their size.

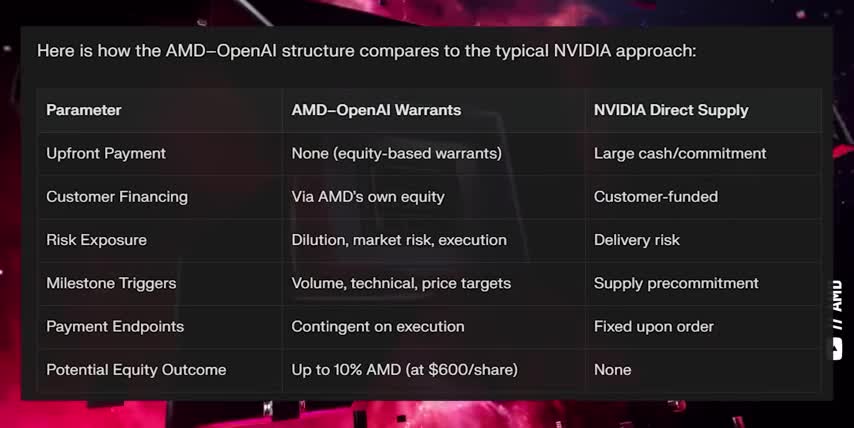

But the other part of this deal is even crazier, especially for AMD's investors. The big mistake that most people are making is thinking that OpenAI is buying 10% of AMD. That's not what's happening here. AMD is giving OpenAI warrants to buy shares of their stock for one penny apiece. That means AMD is financing OpenAI with equity based on OpenAI hitting certain infrastructure deployment milestones, not the other way around.

Which is why we can't compare this deal directly to Nvidia's deal with OpenAI in the first place. The full 160 million shares of AMD vest only if OpenAI successfully deploys all 4.3 million or so AMD GPUs. By the way, 160 million shares means AMD's shareholders would get diluted by around 9%, based on AMD's current number of shares outstanding. So, this isn't the same as Nvidia's $100 billion direct investment in OpenAI from a couple weeks ago.

This is a high-stakes, performance-driven, dilutive deal with huge upside only if every milestone is met. Not just that, but the last milestone is AMD stock hitting a whopping $600 a share, which is roughly a 3x from today's prices. And if AMD stock does hit that price, it would imply a $1 trillion valuation, in which case, AMD would be giving OpenAI around $100 billion worth of equity, which works out to 10% of the company.
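Here is a quick sketch of how those final-milestone numbers fit together. It uses only the share count, target price, and valuation quoted above, and it ignores the one-cent exercise price since that is negligible at this scale.

```python
# Value of the full warrant package if the last milestone is reached.
# All inputs are figures quoted above; the $0.01 exercise price is ignored.
WARRANT_SHARES = 160e6     # shares OpenAI can buy if every milestone is met
TARGET_PRICE = 600         # final stock-price milestone, in dollars
TARGET_MARKET_CAP = 1e12   # valuation implied at that price

warrant_value = WARRANT_SHARES * TARGET_PRICE
implied_share_count = TARGET_MARKET_CAP / TARGET_PRICE
stake = warrant_value / TARGET_MARKET_CAP

print(f"Warrant value:       ${warrant_value / 1e9:.0f}B")
print(f"Implied share count: {implied_share_count / 1e9:.2f}B")
print(f"Stake in company:    {stake:.1%}")
```

The result is a bit under $100 billion and a bit under 10%, which is where the rounded figures come from.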

But even before that, AMD gets a multi-billion dollar hardware order and direct ties to the fastest growing AI company on earth, which means that other AI companies will follow close behind. And since their market cap is currently around $360 billion, this historically huge partnership with OpenAI could make AMD one of the best stocks to get rich without getting lucky, as long as every milestone gets met.

But I think this partnership is also a very important data point for every other AI stock, so let's talk about that next.

And speaking of data, you probably know that VPNs protect you and your data by encrypting it and changing your digital location. But did you know that VPNs can also save you some serious money? That's where Surfshark comes in, the sponsor of this video. Surfshark is a super fast and easy-to-use VPN that you can install on unlimited devices with just one account to protect yourself and your entire family. They also have add-ons like Surfshark Alert, which alerts you if your email, credit cards, or personal information get leaked in a data breach. Talk about a total lifesaver. Also, many online stores raise their prices based on your location, but with Surfshark, you can always get the best price on expensive purchases like plane tickets, hotel rooms, and rental cars. So if you care about your family's privacy and you like saving money, you can go to surfshark.com/ticker to get up to four extra months for free. And with Surfshark's 30-day money-back guarantee, there's no risk in trying it with my link in the description below today.

Alright, so far we've covered just how huge this partnership is for AMD, since they're slated to supply millions of GPUs to OpenAI over the next few years. We've also covered how AMD's $100 billion deal with OpenAI is different compared to Nvidia's from a couple weeks earlier. Now let me tell you something that will put you ahead of every Wall Street analyst comparing AMD to Nvidia because of this deal.

The global AI market is currently expected to 19x in size over the next 9 years. That would be a compound annual growth rate of 38% through 2034.

And Bloomberg just published an article showing that Meta, Google, Amazon and Microsoft are projected to spend a combined $410 billion in AI CapEx in 2026. That's up from $220 billion in 2024, which is a similar growth rate of 37% per year. I'm providing multiple sources here, since these growth rates are so huge. Remember, 37% is about 3 times faster than the growth of the S&P 500. And I think that might actually be a conservative estimate.
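Both growth rates can be checked with the standard compound annual growth rate formula. This is a small sketch using the 19x-over-9-years market forecast and the $220 billion to $410 billion CapEx figures quoted above; the `cagr` helper is just an illustrative name.

```python
def cagr(growth_multiple: float, years: float) -> float:
    """Compound annual growth rate for a total growth multiple over N years."""
    return growth_multiple ** (1 / years) - 1


# Global AI market: expected to grow 19x over the next 9 years.
market_cagr = cagr(19, 9)

# Hyperscaler AI CapEx: $220B in 2024 to a projected $410B in 2026.
capex_cagr = cagr(410 / 220, 2)

print(f"AI market CAGR: {market_cagr:.1%}")
print(f"AI CapEx CAGR:  {capex_cagr:.1%}")
```

The two sources measure different things over different periods, so the fact that they land within a couple of points of each other is the interesting part.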

In my last video, I broke down some of the biggest highlights from Jensen Huang's recent appearance on the BG2 pod, a great podcast focused on venture capital and advanced technology. And one point Jensen has been making ever since DeepSeek's big reasoning breakthroughs is that demand for AI inference will go up by 1 billion x.

"The one that was probably most profound for me was you pounding the table. And you said, inference isn't going to 100x, 1,000x. It's going to 1 billion x. Which brings us to where we are today."

"I underestimated. Let me just go on record. I underestimated."

That 1 billion x increase in inference demand comes from four big factors that multiply together. First, ever since OpenAI released ChatGPT in 2022, people have been adopting generative AI even faster than they adopted smartphones after the iPhone came out in 2007 or tablets after the iPad launched in 2010. Second, the number of tokens per prompt has increased big time.

When ChatGPT first came out, early one-shot question and answer prompts took anywhere from 200 to 1,000 tokens to complete. But today, the same exact prompt can easily cost anywhere from 10 to hundreds of times more tokens as the model reads and analyzes more sources, makes plans and reasons through problems step by step, and writes code or builds data tables in support of its response. Third, the number of AI use cases is growing well beyond text-based large language models.

In my last video, I shared the slide from a panel that I attended at NVIDIA GTC. It was hosted by the former lead of AI and Materials at Google DeepMind. Each x-axis is time before and after adopting AI, and the y-axis is AI's impact on discovering new materials in Chart 1, the resulting increase in patent filings in Chart 2, and the number of new product prototypes in Chart 3.

Six months after adopting generative AI, materials scientists started discovering a record number of new advanced materials. Eight months in, they were filing a record number of new patents, and by month 17, they were testing a record number of new product prototypes. But that's just for materials science. There are charts like this for chip design and lithography, signal processing and communications, gene sequencing and drug discovery, movies, music and video games, you name it.

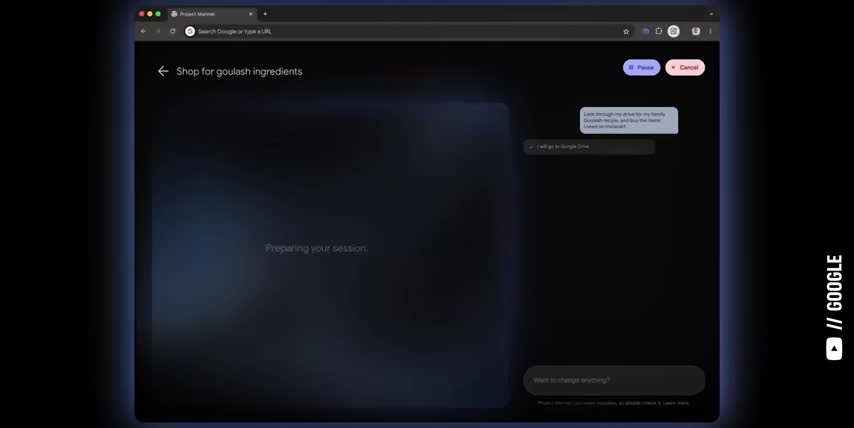

And fourth, it's not just people prompting AI. AI agents can take actions on behalf of users, including spinning up other AIs as part of larger processes.

"Suppose I were to hire a $100,000 employee, and I augmented that $100,000 employee with a $10,000 AI. And that AI, as a result, made the employee twice more productive, three times more productive. Would I do it? Heartbeat. I'm doing it across every single person in our company right now, right?"

And just like most of us have multiple computers today, like a work laptop, a personal smartphone, a smart TV, and a tablet, it's not crazy to assume that we'll all have multiple AI agents working for us in the future. A work agent, a personal assistant, a content curator, and so on.

All four of these factors, more people, more use cases, more tokens per prompt, and AI agents, have each grown by more than 10x over the last three years, and they all multiply together. So it's not that crazy to think that demand for AI inference will go up by 1 billion x over the next few years. Here's why I'm focusing so much on this 1 billion x number. I started this section by saying that I'd tell you something that will put you ahead of every Wall Street analyst. So here it is.

If the demand for inference will increase by a billion fold over the coming years, there's no way supply will keep up with demand. And if the market is growing much faster than anyone can capture it, then companies like Nvidia and AMD aren't competing at all, at least in the near and medium term. Every GPU that Nvidia makes will get deployed. Every ASIC that Broadcom makes will get deployed. Every accelerator that AMD makes will get deployed.

That's the entire point of this massive partnership with OpenAI. And it's not just chip companies. Every AI infrastructure provider, from Amazon, Microsoft, and Google to CoreWeave, Dell, and Oracle, is going to see its utilization rates skyrocket as more people with more use cases spend more tokens per prompt and AI agents do the same. This is why I think we're still so early in the AI era.

This is why it's so important to understand the science behind the stocks, and this is where I went wrong on AMD and their role in the AI revolution. So let me wrap up this video by admitting my mistake, and if you feel I've earned it, consider hitting the like button and subscribing to the channel. That really helps me out, and it lets me know to make more content like this. Thanks, and with that out of the way, let's talk about what I got wrong on AMD.

Like I just said, demand for AI inference will go up by a billion times over the next few years, which means AMD isn't competing with Nvidia, since the market is growing so much faster than either company can produce their chips. And like I say in every video I make on AMD, they dominate every market where they don't compete with Nvidia. So, I'm sorry I didn't put those two things together before this partnership with OpenAI.

I just couldn't see the forest for the trees, and I promise I'll be more careful in the future. At the end of the day, this deal is a huge validation for AMD, because it means OpenAI believes two things. First, they believe AMD can deliver GPUs five times faster than they do today, and second, they believe that AMD can reach a trillion dollar valuation over the next few years, or else OpenAI would never agree to these terms.

And that means it's still very early for AMD stock, not just because it would have to triple to get to a trillion dollar valuation from here, but because many other AI companies will want to run on the same hardware, software, and development ecosystems as OpenAI. And that makes AMD a great stock to get rich without getting lucky. And if you want to see what other stocks I'm buying to get rich without getting lucky, check out this video next.

Either way, thanks for watching, and until next time, this is Ticker Symbol: YOU. My name is Alex, reminding you that the best investment you can make is in you.
