Table of Contents

1. Introduction

2. Google TPUs

3. Amazon Trainium Chips

4. Competition with Nvidia

5. AI Market Impact

6. Key Takeaways

Introduction

Something big is happening at Google and Amazon. They just launched chips to challenge Nvidia's data center dominance, which could spell big trouble for the world's most valuable company and change the course of the entire AI revolution. Your time is valuable, so let's get right into it. About a week ago, news broke that Meta Platforms was in talks to spend billions of dollars on Google's custom TPU chips.

But instead of rushing out to make a video, I took some time to understand what these chips actually do and what this means for the AI market. In my opinion, the single most important question for AI investors is how long Nvidia can dominate data centers with their GPUs and CUDA ecosystem, since so much of the AI market is built on top of them today. And I'm glad I waited, because Amazon also announced their new Trainium 3 chips a couple of days ago, so I'll break those down for you too.

I'm also not here to hold you hostage, so here's exactly what I'll cover in this video. I'll explain what Google's and Amazon's chips actually do, how they compete with Nvidia's hardware ecosystem, how these chips could actually change the course of the entire AI revolution (but not in the way most investors think), and of course, which AI stocks I'm buying as a result. There's a ton to talk about, so let's start with the story that's on everybody's mind.

About a week ago, Google announced that they would sell their custom Tensor Processing Units, or TPUs, to other data centers. According to Morgan Stanley, Google has a roadmap to ship a million TPUs to external customers by 2027, which would increase their cloud revenue by over 10%, or close to $13 billion. Google's long-term internal goal is to capture around 10% of Nvidia's data center revenues over time, which works out to tens of billions of dollars every year.

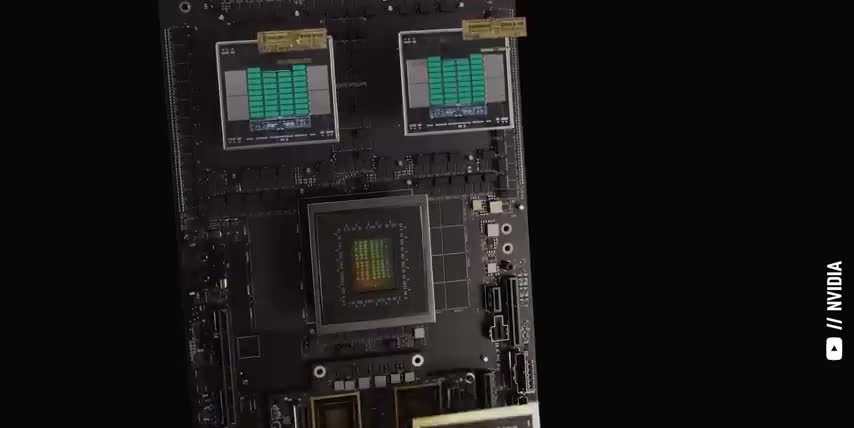

They plan to do that by chipping away at some of Nvidia's biggest customers and their most widely supported workloads. This is a huge change from Google's previous chip strategy, and it's actually much bigger than just competing with Nvidia. Let me show you why. While GPUs are general-purpose accelerators that can run almost anything, TPUs are a different kind of chip called an ASIC, or application-specific integrated circuit.

Google's TPUs are built specifically for tensor operations, like matrix multiplication and the related math that dominates deep learning today. That makes Google's TPUs especially good at three kinds of workloads. First, they're great at high-volume inference at massive scale. Google serves billions, if not trillions, of requests across very specific services, like Search and advertising, Maps and Shopping, YouTube and Gemini.

So their biggest hardware bottleneck isn't flexibility; it's efficiency. Google's TPUs can outperform GPUs by anywhere from 50 to 100% per dollar or per watt, but only for this specific set of applications. Their performance also scales extremely well when thousands of TPUs are connected together for parallel computing, thanks to each chip having integrated networking and fast interconnects, and being tightly coupled with memory.

That also makes them great at large training jobs for those same AI models.
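Both of those workloads, high-volume inference and large-scale training, boil down mostly to matrix multiplication. As a rough illustration (plain Python, not TPU code; the `matmul` and `mac_count` helpers and all numbers are hypothetical), here's the multiply-accumulate loop at the heart of a neural-network layer. TPUs include dedicated matrix units that perform this same pattern in hardware, many multiply-accumulates at a time, which is a large part of why they're so efficient on these workloads.

```python
def matmul(a, b):
    """Multiply matrix a (rows x inner) by matrix b (inner x cols).

    Plain-Python sketch for illustration only; real accelerators run this
    pattern in dedicated hardware rather than in nested loops.
    """
    rows, inner, cols = len(a), len(b), len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions must match")
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            acc = 0.0
            for k in range(inner):
                acc += a[i][k] * b[k][j]  # one multiply-accumulate (MAC)
            out[i][j] = acc
    return out


def mac_count(rows, inner, cols):
    """Number of multiply-accumulates a (rows x inner) @ (inner x cols) needs."""
    return rows * inner * cols


# Hypothetical example: a batch of 2 requests, each with 2 input features,
# passing through a layer with 3 outputs (a 2 x 3 weight matrix).
requests = [[1.0, 2.0],
            [3.0, 4.0]]
weights = [[1.0, 0.0, 2.0],
           [0.5, 1.0, -1.0]]
print(matmul(requests, weights))  # [[2.0, 2.0, 0.0], [5.0, 4.0, 2.0]]
print(mac_count(2, 2, 3))         # 12
```

The MAC count grows with the product of all three dimensions, so pushing billions of requests through large models means an enormous number of these operations. That's why hardware built only for them can pay off at Google's scale.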

And the third kind of workload that Google's TPUs are great at is specialized recommendation and ranking systems, since so many of Google's services involve ranking websites, videos, products, businesses, and advertisements based on searches, demographics, browsing and purchase history, and so on. Google's TPUs have custom hardware to accelerate data requests from huge lookup tables and to do the highly specialized math involved in ranking the results.

There are a few key reasons that Meta Platforms would want to buy these TPUs.

First, both companies have very similar workloads. Google has YouTube, Meta has Instagram, Google has Gemini, Meta has Llama, and so on. And it's cheaper to buy Google's TPU AI factories than to build their own full solutions from scratch: not just the chips, but the racks, the liquid cooling, optical interconnects, workload schedulers, and software that all need to be designed together.

Also, Meta's in-house accelerators, the Meta Training and Inference Accelerator (MTIA) chips, only support a limited range of workloads, mainly focused on inference. Google, meanwhile, already has pods of 10,000 TPUs training frontier-scale models for search, video, and large language models. That makes TPUs a great way for Meta to catch up in the AI hardware race and diversify their hardware portfolio beyond Nvidia's GPUs.
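Those shared workloads include the recommendation and ranking systems described earlier. As a minimal sketch, assuming a simple embedding-lookup-plus-dot-product scorer (a common textbook pattern, not Google's or Meta's actual system; every table name, ID, and number here is hypothetical), ranking comes down to two steps: fetching vectors from large lookup tables, then doing dense math on them to sort the candidates.

```python
# Hypothetical embedding tables. In production, tables like these can hold
# billions of rows, which is why fast lookups benefit from dedicated hardware.
USER_EMBEDDINGS = {
    "user_a": [0.9, 0.1, 0.0],
}
ITEM_EMBEDDINGS = {
    "cooking_video": [0.8, 0.2, 0.1],
    "gaming_video": [0.1, 0.9, 0.3],
    "news_video": [0.3, 0.3, 0.9],
}


def dot(u, v):
    """Dense math step: similarity score between two embedding vectors."""
    return sum(x * y for x, y in zip(u, v))


def rank_items(user_id, candidate_ids, k):
    """Return the top-k candidate IDs for a user, best match first."""
    user_vec = USER_EMBEDDINGS[user_id]  # lookup step: fetch the user's vector
    return sorted(
        candidate_ids,
        # lookup step for each item, then a dot product to score it
        key=lambda item_id: dot(user_vec, ITEM_EMBEDDINGS[item_id]),
        reverse=True,
    )[:k]


print(rank_items("user_a", ["cooking_video", "gaming_video", "news_video"], k=2))
# ['cooking_video', 'news_video']
```

Real systems use much larger vectors and far more elaborate scoring models, but the split between memory-heavy lookups and math-heavy scoring is the part that specialized hardware targets.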

But there are a few important points that investors should understand about this potential deal.

Meta is spending around $70 billion on AI infrastructure this year alone, and their capex budget for 2026 is projected to be close to $100 billion. That's a massive amount of internal demand that their MTIA chips can't possibly fill. But other hyperscalers don't have this same problem. While Amazon and Microsoft also have massive capex budgets, their custom AI chips and internal hardware systems are much more mature than Meta's, so they're much less likely to buy Google's TPUs instead of just investing in their own already proven technologies.

In fact, Amazon Web Services just launched their new Trainium 3 chip, another ASIC that's focused on extreme power efficiency and cost savings for a few specific AI workloads that AWS runs at extremely high volumes, like training and inference for large language models with huge parameter counts and context windows, as well as the multimodal and mixture-of-experts models behind powerful AI agents like Claude.

This chip has 50% more memory capacity, 70% more bandwidth, and twice the compute performance, and it's 40% more energy efficient than Amazon's previous generation. So at first glance, 2026 could be a very tough year for Nvidia.

Alright, so both Google and Amazon have high-performing custom chips that go after the same part of the AI market: training and inference for huge, high-throughput AI models and recommender systems that need to handle billions of consumer requests. Now that we understand what these chips do, let's talk about how they actually compete with Nvidia's hardware ecosystem.

The truth is, outside of those very specific workloads, they mostly don't. Nvidia's GPUs power many kinds of AI across a wide variety of industries: not just token generation for large language models, but image and video generation, physics modeling and simulation, professional visualization and product design, protein folding and drug discovery, and robotic motion and self-driving cars.

The list goes on and on.

And if you've been watching this channel for a while, you know that Nvidia's hardware ecosystem is much bigger than just GPUs. In fact, it includes something called NVLink Fusion, a special chiplet that can be added to other CPUs or accelerators so they can be installed in Blackwell compute trays, or so that Blackwell GPUs and networking solutions can be used in data centers that have already invested in other chips, like ARM-based CPUs or more application-specific accelerators.

So while Google's TPUs might be more powerful for specific workloads, they're also more closed, forcing data centers to rely on Google's hardware and software stack as is. And Google's stack is nowhere near as versatile or as widely adopted as CUDA. That's why Google's long-term goal is to capture only around 10% of Nvidia's GPU market with their TPUs, like I mentioned earlier.

And Amazon's total addressable market is even smaller, since they're keeping their Trainium 3 chips in-house, which means companies will have to run their workloads on AWS if they want to use these chips. On the flip side, Nvidia has millions of GPUs in almost every AI data center on Earth, from AWS and Google Cloud to Microsoft Azure and Meta Platforms' AI superclusters.

Now that we understand how Google's and Amazon's chips compete with Nvidia's hardware ecosystem, let's talk about how these chips impact the overall AI market, and which stocks are worth buying as a result.

First, the AI market is projected to grow almost 19x over the next 9 years, which works out to a compound annual growth rate of over 38% through 2034. That's almost 3 times faster than the average growth rate of the S&P 500.
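The growth-rate figure follows from the 19x projection via the standard compound annual growth rate formula, CAGR = multiple^(1/years) - 1. Here's a quick check in Python; the 19x and 9-year inputs come from the projection quoted above (not verified here), and the helper names are just for illustration.

```python
def cagr(growth_multiple, years):
    """Compound annual growth rate implied by growing `growth_multiple`-fold in `years` years."""
    if growth_multiple <= 0 or years <= 0:
        raise ValueError("growth_multiple and years must be positive")
    return growth_multiple ** (1 / years) - 1


def grow(multiple_per_year, years):
    """Total growth multiple after compounding a yearly multiple for `years` years."""
    return multiple_per_year ** years


rate = cagr(19, 9)
print(f"{rate:.1%}")                # 38.7%
print(f"{grow(1 + rate, 9):.1f}x")  # 19.0x (round trip back to the projection)
```

So "over 38%" is consistent with the 19x figure: compounding about 38.7% a year for 9 years multiplies the market roughly 19 times.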

So even if Google does take 10% of Nvidia's market share over time, that market is growing so fast that neither of them can fill the demand alone. But that growth is also spread across very different areas of AI, like natural language processing, computer vision, autonomy, and robotics. Nvidia's GPUs are flexible enough to support all of these different segments, while Google's TPUs and Amazon's Trainium chips focus on machine learning and natural language processing. So while Google and Amazon might challenge Nvidia's pricing power and margins with AI labs and AI data centers focused only on language models, like OpenAI and Anthropic, they can't really compete once robots, sensors, physical motion, or digital simulation enter the equation.

And don't forget: the biggest AI labs and data centers won't ever go all in on one ecosystem anyway, since they don't want to be dependent on a single vendor, and their clients want access to the best chips at the best prices, which change depending on the workload. That's why every hyperscaler has their own AI chips in the first place.

Now let me say the quiet part out loud. The biggest loser in this situation is AMD, since their entire data center strategy is being the cheaper, better-bang-for-the-buck alternative to Nvidia, especially for large language model inference, which is exactly what Google's and Amazon's new chips are designed to do.

Google's TPUs will compete directly with AMD for cost-optimized inference performance and for some specific training workloads in Google Cloud, in Meta's data centers, and with Anthropic. And Amazon's Trainium 3 chips will lower the need for AMD's GPUs inside AWS. So, as more companies build more application-specific chips, they will reduce Nvidia's pricing power and margins, but they could remove the need for AMD's chips altogether.

Alright, but who are the biggest winners in this situation? The Taiwan Semiconductor Manufacturing Company, ticker symbol TSM, is the only company on Earth capable of making Nvidia's GPUs, Google's TPUs, and Amazon's Trainium chips.

They also manufacture Microsoft's custom Maia accelerators and Meta's training and inference chips. So as their customers start designing more specialized chips for different kinds of workloads, there will be even more demand for TSMC's most advanced and most profitable chip production nodes. On top of that, more specialized chips require more advanced packaging techniques to get the processors, the memory, and the networking components close enough together and connected at ultra-high bandwidths.

Not only is TSMC the market leader by far when it comes to advanced packaging, it's also the main driver of their margins. So long story short, the more demand there is for different kinds of AI chips, the more TSMC can charge for their limited supply, which makes TSM a great stock regardless of who wins among Nvidia, Google, Amazon, and AMD. But we can't talk about custom chips without talking about Broadcom, ticker symbol AVGO.

Broadcom helped design multiple generations of Google's TPUs, Meta's training and inference accelerators, and ByteDance's custom chips that help power TikTok. And just a few weeks ago, Broadcom announced a massive partnership with OpenAI to design their XPUs, which are custom processors optimized to power ChatGPT, GPT-5, and OpenAI's future models. But regardless of which accelerator wins the AI era, those chips need to be connected with ultra-high-speed networks, which is another area where Broadcom competes directly with Nvidia.

In fact, Broadcom has a 90% market share in Ethernet switching chips for data centers, which is just as dominant as Nvidia's share of the data center GPU market. Around 30% of AI workloads run on Ethernet today, and that number is actually growing, since the overwhelming majority of the world's data centers already run on Ethernet. That's why Nvidia also offers Ethernet-based networking products instead of forcing data centers to switch to InfiniBand, a networking technology Nvidia dominates through its acquisition of Mellanox.

So, by holding both Broadcom and Nvidia stock, investors are holding the two companies selling networking solutions to almost every AI data center and supercomputer in the world. But the whole reason that Google and Amazon even bother designing their own custom chips is for power efficiency. That's because electricity accounts for around one-third of a data center's ongoing operating expenses, and cooling accounts for about 40% of that.
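To see what those two percentages mean together, here's the arithmetic as a small Python sketch. The one-third and 40% shares are the rough figures quoted above; the $300 million annual operating budget is a made-up number purely for illustration.

```python
# Rough shares quoted in the text (approximate figures, not exact data).
ELECTRICITY_SHARE_OF_OPEX = 1 / 3    # "around one-third" of operating expenses
COOLING_SHARE_OF_ELECTRICITY = 0.40  # "about 40% of that" goes to cooling


def power_and_cooling_costs(annual_opex):
    """Split a data center's annual operating expenses into electricity and cooling."""
    electricity = annual_opex * ELECTRICITY_SHARE_OF_OPEX
    cooling = electricity * COOLING_SHARE_OF_ELECTRICITY
    return electricity, cooling


opex = 300_000_000  # hypothetical $300M per year
electricity, cooling = power_and_cooling_costs(opex)
print(f"electricity: ${electricity / 1e6:.0f}M")       # electricity: $100M
print(f"cooling: ${cooling / 1e6:.0f}M")               # cooling: $40M
print(f"cooling share of opex: {cooling / opex:.1%}")  # cooling share of opex: 13.3%
```

Under these rough shares, cooling alone is about 13% of total operating expenses, and power overall is a third, so gains in chip and cooling efficiency show up directly in operating costs.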

That means both power and cooling have a huge impact on the profit margins for AI, which is why the third stock on my list is Vertiv Holdings, ticker symbol VRT. Vertiv makes power and cooling systems for data centers. For example, they make liquid cooling systems specifically for high-density servers and the massive GPU clusters used for AI training and inference. Vertiv's liquid cooling systems are modular in design, and they can be scaled up to cool 600 kilowatts' worth of server racks per unit. That means one of these systems can cool five 120-kilowatt Blackwell racks without a data center needing to overhaul its pre-existing infrastructure.

As a result, Vertiv supplies cooling solutions to all three major cloud infrastructure providers: AWS, Google Cloud, and Microsoft Azure. Vertiv also supplies them with core power systems like the Liebert EXL, a high-capacity uninterruptible power supply designed specifically for hyperscale and cloud facilities that supplies huge amounts of energy at very high efficiency.

So hopefully this video helped you understand what Google's and Amazon's custom chips do and their overall place in the AI revolution: competing with Nvidia on specific kinds of workloads, but threatening AMD's position as a cost-effective alternative in the process.

Key Takeaways


The key takeaways from this article are:

- Google and Amazon have launched custom chips to challenge Nvidia's data center dominance.

- These chips are designed for specific workloads and have the potential to change the course of the AI revolution.

- Nvidia's hardware ecosystem is much bigger than just GPUs and includes NVLink Fusion.

- Google's TPUs and Amazon's Trainium chips focus on machine learning and natural language processing.

- The biggest loser in this situation is likely to be AMD, as Google's TPUs and Amazon's Trainium chips compete directly with AMD for cost-optimized inference performance.

- The biggest winners in this situation are likely to be TSMC, Broadcom, and Vertiv, as they provide essential components and services for AI data centers.

And of course, we covered three stocks set to win big regardless of which AI chip actually comes out on top, making them all a great way to get rich without getting lucky. And if you want to see even more stocks that I'm buying to get rich without getting lucky, check out this video next. Either way, thanks for watching, and until next time, this is Ticker Symbol: YOU. My name is Alex, reminding you that the best investment you can make is in you.

Check out our YouTube channel

Get the latest videos and industry deep dives as we check out the science behind the stocks.