Table of Contents

1. Introduction

2. NVIDIA Hardware Ecosystem

3. NVIDIA Partnerships

4. Quantum Computing and Robotics

5. Key Takeaways

A few days ago, NVIDIA CEO Jensen Huang went to Washington DC to talk about the AI revolution. But what I thought would be a pretty cookie-cutter keynote ended up being over two hours of Jensen dropping non-stop bombshells and revealing NVIDIA's entire AI playbook. So in this post, I'll share the most important takeaways for investors, because what Jensen just announced will make a lot of people very rich, including you and me.

Your time is valuable, so let's get right into it. Here's what I'll be covering: NVIDIA's insane new Rubin GPUs; their huge new partnerships with companies like Palantir, CrowdStrike, and Uber; Jensen's game-changing announcements for robotics and quantum computing; and, of course, what all this means for AI stocks. But let me start with something that will put you ahead of almost every Wall Street analyst trying to make money on NVIDIA and the AI revolution.

NVIDIA Hardware Ecosystem

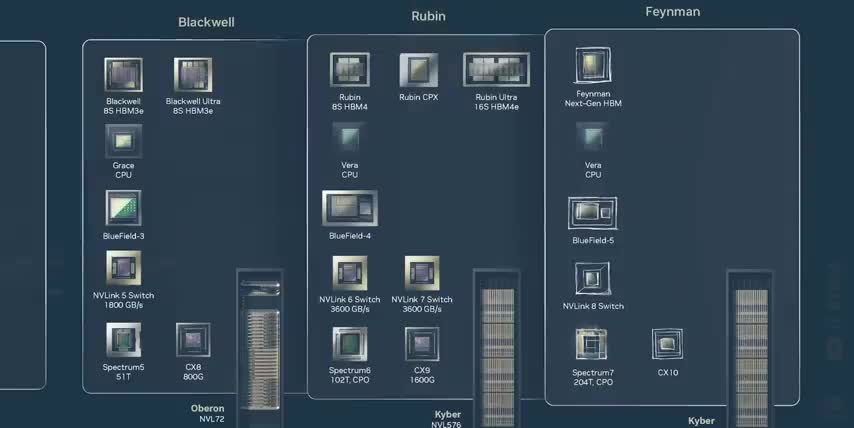

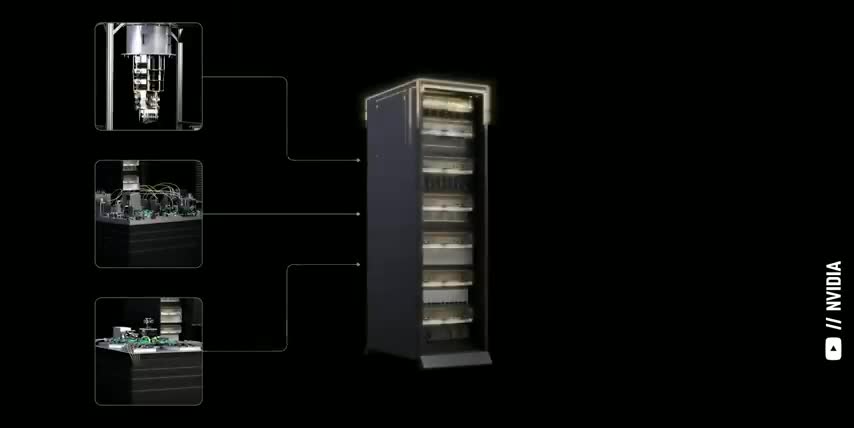

When Nvidia announces a new architecture like Blackwell or Rubin, they're not just talking about a new GPU. They're actually announcing at least six different chips that all work together. Every year, they co-design a new GPU, a new CPU, a new data processing unit or DPU, updated NVLink switch chips to connect GPUs inside a single rack, as well as InfiniBand and Spectrum X Ethernet chips that connect multiple racks together.

And just like different robots in a car factory work together to produce cars as efficiently as possible, all these different chips in an AI factory work together to generate AI tokens as efficiently as possible. So let's walk through that next, and I'll show you how Nvidia's new Rubin GPUs are changing the game. Nvidia's Blackwell and Blackwell Ultra chips are actually two GPU dies connected by an ultra-fast 10TB per second link. That way, they act like one giant GPU.

Nvidia did this because the technology to put 200 billion transistors on a single chip this big simply doesn't exist. That's why the new Rubin GPU that Jensen just showed off during his keynote also has this two-die design. But the Rubin Ultra, which comes out in 2027, takes this trick to the next level by connecting four GPU dies instead of two. So now, Nvidia has two different ways to scale performance faster than Moore's law.

They can make more powerful GPU dies and they can connect more GPU dies together. The next level up is the Grace Blackwell GB200 Superchip, which connects two Blackwell GPUs and one Grace CPU. The GB300 Superchip does the same thing, except with Blackwell Ultra GPUs. And when Nvidia starts shipping Vera Rubin in 2026, the Vera CPU will replace Grace and the Rubin GPUs will replace the Blackwells.

But either way, the two GPUs and one CPU are connected by NVLink, which is a chip-to-chip connection with a bandwidth of 900 gigabytes per second. That's fast enough to move 150 4K movies between these chips every single second. Regardless of the architecture, two of these superchips go into one compute tray, and they're also connected by NVLink. Remember, Nvidia co-designs all of these chips together.
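To put that movie comparison in numbers, here's a quick back-of-the-envelope sketch. The 900 GB/s and 150 movies per second come from the paragraph above; the implied movie size is just my inference from those two figures, and the helper name `transfer_seconds` is made up for illustration. It also ignores latency and protocol overhead, so treat it as an idealized estimate.

```python
# Figures quoted in the text above.
NVLINK_C2C_GB_PER_S = 900   # chip-to-chip bandwidth, in gigabytes per second
MOVIES_PER_SECOND = 150     # "150 4K movies ... every single second"

# Movie size implied by the comparison (an inference, not an NVIDIA figure).
implied_movie_size_gb = NVLINK_C2C_GB_PER_S / MOVIES_PER_SECOND


def transfer_seconds(size_gb: float,
                     bandwidth_gb_per_s: float = NVLINK_C2C_GB_PER_S) -> float:
    """Idealized time to move size_gb at full bandwidth (no latency, no overhead)."""
    return size_gb / bandwidth_gb_per_s


print(f"Implied 4K movie size: {implied_movie_size_gb:.0f} GB")
print(f"Time to move one movie: {transfer_seconds(implied_movie_size_gb) * 1000:.2f} ms")
```

In other words, the comparison assumes a 4K movie of roughly 6 GB.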

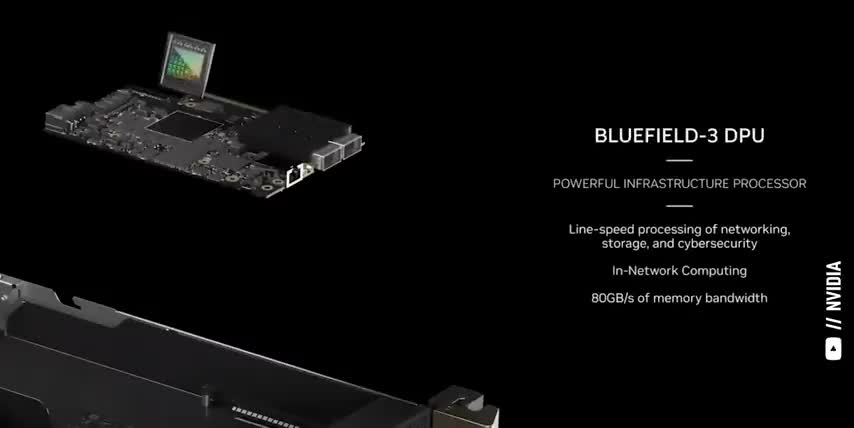

Today's Blackwell systems use 5th-generation NVLink switch chips, but the 6th-generation NVLink chips will double in speed next year, and the 7th generation will double the number of ports to support Rubin Ultra's four-die design in 2027.

But there's more than just GPUs and CPUs. Nvidia also makes a DPU, or data processing unit, called BlueField. DPUs handle critical networking, storage, and security tasks so that the CPUs and GPUs can focus on generating tokens. For example, DPUs manage data movement between different compute trays, storage devices, and racks, as well as encrypting and decrypting data, enforcing firewalls, and turning off trays whenever a chip fails.

Eighteen of these compute trays go into one GB200 NVL72 system.

And from now on, that shouldn't sound like alphabet soup to you. There are 2 Blackwell GPUs per superchip, 2 superchips per compute tray, and 18 compute trays per rack. 2x2x18 is 72 Blackwell GPUs, and since they're all connected by NVLink, that's why the whole system is called the GB200 NVL72 for Grace Blackwell and the GB300 NVL72 for Blackwell Ultra. Alright, we just went over the compute side of Nvidia's AI infrastructure: the GPUs, CPUs, and DPUs powering the entire AI revolution.
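If you want to sanity-check the "72" in NVL72 yourself, here's a minimal sketch of the 2x2x18 arithmetic above. The constant and function names are my own labels, not NVIDIA terminology, and the CPU count simply assumes one CPU per superchip as described earlier.

```python
# Rack composition as described in the text above.
GPUS_PER_SUPERCHIP = 2       # two Blackwell GPUs per superchip
CPUS_PER_SUPERCHIP = 1       # one Grace CPU per superchip
SUPERCHIPS_PER_TRAY = 2      # two superchips per compute tray
COMPUTE_TRAYS_PER_RACK = 18  # eighteen compute trays per rack


def chips_per_rack(chips_per_superchip: int) -> int:
    """Multiply a per-superchip count up to the whole rack."""
    return chips_per_superchip * SUPERCHIPS_PER_TRAY * COMPUTE_TRAYS_PER_RACK


total_gpus = chips_per_rack(GPUS_PER_SUPERCHIP)
total_cpus = chips_per_rack(CPUS_PER_SUPERCHIP)

print(f"GPUs per rack: {total_gpus}")
print(f"CPUs per rack: {total_cpus}")
```

The same multiplication gives the CPU count for the rack, which is half the GPU count because each superchip pairs one CPU with two GPUs.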

Now let's quickly cover the networking side that connects all these chips together. In between these 18 compute trays are 9 NVLink switch trays. Each tray has 2 NVLink chips and each chip currently connects 4 GPUs. So, 4 ports per NVLink chip times 2 chips per tray times 9 trays again gives us 72 ports that connect every GPU together so that they can act like one giant GPU. There are a few special things that investors need to know about NVLink.

First, these chips enable direct data transfers between GPUs, even across different trays, at rates much faster than traditional PCIe slots like the ones you find in your computer.

Second, they let GPUs access each other's memory, effectively making one large shared memory pool, which is how the whole AI data center can act like one giant GPU.

Third, Nvidia has something called NVLink Fusion, which is a special chiplet that other companies can add to their own chips to integrate them into the compute trays we just went over. That means companies like Amazon, Google, Microsoft, Meta Platforms, and even AMD can now use their own chips inside of Nvidia's broader hardware ecosystem. But NVLink Fusion could also be how Nvidia will expand their ecosystem into new markets like quantum computing and robotics, which I'll talk about in just a few minutes.
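Going back to the switch-tray math from a moment ago, here's a small sketch that checks the NVLink port count against the GPU count. The numbers are the ones quoted in this post (4 GPU connections per switch chip, 2 chips per tray, 9 trays, 72 GPUs); the names are hypothetical, and this is a simplified head count rather than a model of the real NVLink topology.

```python
# Networking figures as quoted earlier in this post.
GPU_LINKS_PER_SWITCH_CHIP = 4  # "each chip currently connects 4 GPUs"
SWITCH_CHIPS_PER_TRAY = 2
SWITCH_TRAYS_PER_RACK = 9
GPUS_IN_RACK = 72              # from the 2x2x18 compute-side count


def nvlink_ports(links_per_chip: int, chips_per_tray: int, trays: int) -> int:
    """Total GPU-facing ports across all switch trays in one rack."""
    return links_per_chip * chips_per_tray * trays


total_ports = nvlink_ports(GPU_LINKS_PER_SWITCH_CHIP,
                           SWITCH_CHIPS_PER_TRAY,
                           SWITCH_TRAYS_PER_RACK)
every_gpu_has_a_port = total_ports >= GPUS_IN_RACK

print(f"NVLink ports: {total_ports}, GPUs: {GPUS_IN_RACK}")
print(f"Every GPU gets a port: {every_gpu_has_a_port}")
```

The point is that the compute side and the networking side land on the same number, which is what lets the rack behave like one giant GPU.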

Either way, now you know that the real magic is in how all these chips come together to make one rack in an AI factory, while most Wall Street analysts just focus on NVIDIA's GPUs because they happen to be making all the headlines. But the headlines you don't see can still move the market. And that's where Ground News comes in. Ground News analyzes over 60,000 articles a day and rates each news source for political bias and factuality.

For example, check out this story about OpenAI partnering with Walmart, with almost twice as much coverage from the left versus the right. While most of these news sources have a high factuality rating, they also show some interesting bias. Headlines from the left focus on Walmart's valuation and shopping with ChatGPT, while headlines on the right focus on what this partnership means for OpenAI. And their blind spot feed shows me which stories are being ignored by one side or the other.

Because knowing what isn't being talked about is just as important as what is. These features help me keep my facts straight and save me a ton of time. And right now, Ground News is giving my audience 40% off their vantage plan. That's their biggest discount yet. So go to ground.news.tsy or click my link in the description to get unlimited access to every Ground News feature for just $5 a month. That's a no-brainer for any serious investor.

NVIDIA Partnerships

Alright, all the chips we covered so far (the Blackwell and Rubin GPUs, the Grace and Vera CPUs, the BlueField DPUs, and the NVLink switch chips) are how NVIDIA scales up the amount of compute power that they can fit into a single rack. Each rack also has a network tray at the top for scale-out. Scaling out just means connecting multiple racks together so they can all act like one giant GPU. For example, NVIDIA SuperPODs connect up to 32 racks using either InfiniBand or Spectrum X Ethernet, depending on what the specific data center is already using.
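To get a feel for what scale-out adds up to, here's a rough sketch that multiplies the 72 GPUs per rack from earlier by the number of racks in a SuperPOD. The 32-rack ceiling is the figure quoted above; the function name and the range check are my own assumptions for illustration, not anything from NVIDIA's tooling.

```python
GPUS_PER_NVL72_RACK = 72    # from the 2x2x18 count earlier in this post
MAX_RACKS_PER_SUPERPOD = 32  # "up to 32 racks"


def gpus_in_superpod(racks: int) -> int:
    """GPU count for a SuperPOD built from the given number of NVL72 racks."""
    if not 1 <= racks <= MAX_RACKS_PER_SUPERPOD:
        raise ValueError(f"racks must be between 1 and {MAX_RACKS_PER_SUPERPOD}")
    return racks * GPUS_PER_NVL72_RACK


for racks in (1, 8, MAX_RACKS_PER_SUPERPOD):
    print(f"{racks:>2} racks -> {gpus_in_superpod(racks)} GPUs")
```

So a fully built-out SuperPOD, under these assumptions, is a couple thousand GPUs acting as one system.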

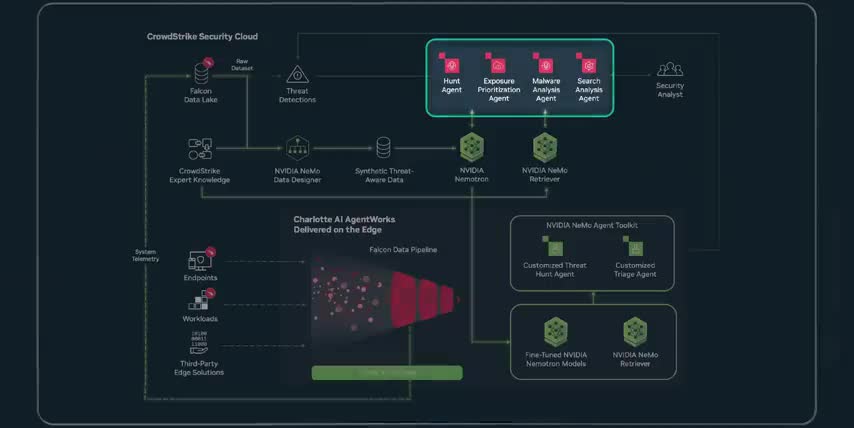

And scaling across just means connecting multiple superclusters or even multiple data centers together, which is how projects like Colossus are connecting hundreds of thousands of GPUs.

And now that we understand how all these different Lego pieces fit together, we can talk about all the different things that they can do and all the markets they can support. Let's start with NVIDIA's new partnerships with CrowdStrike and Palantir. CrowdStrike is a cybersecurity company focused on endpoint detection and response, which means securing a company's desktops, laptops, smartphones, and any other device that connects to their networks.

CrowdStrike has a platform called Falcon, with a library of cloud-based modules to do things like run antivirus scans, manage firewalls, detect malware, lock down threats, and so on.

CrowdStrike is going to use Nvidia's hardware stack and the CUDA acceleration libraries built on top of it to power a wide variety of custom cybersecurity AI models and AI agents, to do things like scan devices and workloads for different kinds of threats, and then kick off the right processes and cybersecurity workloads depending on what they find, from standard data logging and network checks all the way to isolating machines when a threat is actually detected.

On the flip side, Palantir makes AI-powered platforms to give enterprises a real-time view of their data, using something called ontologies, which are basically network graphs to show a company's assets, their attributes, and their relationships. Once a company has this single source of truth for all of their data, they can then use Palantir's platforms and tools to build models, workflows, and automations to run on top of it.

Palantir is partnering with NVIDIA to use their AI models and automations as part of their larger workflows, since NVIDIA's hardware and software ecosystem is already in every cloud service that Palantir already runs on. Through this partnership, Palantir gets to more tightly integrate their AI platforms with the hardware that accelerates them.

And NVIDIA gets insights into the kinds of processes and automations that the biggest enterprises in the world are running today, so that they can build better CUDA libraries and tools for them in the future. Talk about a huge win-win situation for two of the biggest winners of the AI era. Jensen actually announced 10 massive partnerships like these during his keynote presentation.

And what they all have in common is using NVIDIA's hardware, software, acceleration libraries, tools, and AI software in all of these companies' core business workflows. But the AI revolution isn't just about accelerating existing companies and technologies. It's also about powering new ones like quantum computing and robotics. And if you feel I've earned it, consider hitting the like button and subscribing to the channel.

That really helps me out and it lets me know to make more content like this. Thanks and with that out of the way, let's talk about quantum computing and robotics. The most important thing for investors to understand about quantum computing is that it won't replace the computers we already have today. It will complement and enhance them. Just like we already have CPUs, GPUs, and DPUs, we'll also have QPUs or quantum processing units that work within a larger hardware ecosystem.

And just like I showed you NVLink Fusion, which lets any kind of CPU or GPU work within NVIDIA's stack, Jensen just announced NVQLink, a specialized interconnect designed to connect quantum processors and NVIDIA's GPUs.

The goal of NVQLink is to let quantum processors handle the kinds of workloads that they're best at, such as certain kinds of optimization problems, cryptography, complex statistics, and simulations, while GPUs handle the system calibration, noise reduction, error correction, and coprocessing so that the QPUs don't have to.

And make no mistake, the announcement of NVQLink is just as good for quantum computing companies as it is for Nvidia, since quantum processors will now have a place in the most valuable and widespread computing stack on the planet. If you take away one thing from this video, let it be that NVLink, NVLink Fusion, and NVQLink are the kinds of technologies that put Nvidia in every data center on the planet, regardless of the chips or the networks that they run on.

Speaking of which, Jensen made another announcement that surprised me even more than NVQLink, and that's Nvidia's new partnership with Nokia. Nvidia and Nokia are teaming up to build the next generation of AI-powered wireless networks for 6G. The goal is to build smarter, faster, software-defined 6G base stations that use AI to manage, optimize, and secure the flow of data between devices, cell towers, and data centers.

This opens up a multi-hundred-billion-dollar growth market as the telecom industry goes through the same upgrade cycle that we just saw with data centers, ripping out legacy equipment and replacing it with advanced hardware to support 6G and AI workloads. The goal here isn't just faster music downloads and social media posts. It's to build a wireless cloud so that robots and self-driving cars aren't limited by current cellular speeds.

Remember, compute power isn't the bottleneck for most factory robots or robo-taxis, where adding a little weight isn't a big issue. It's connectivity, like the limited signal strength on long stretches of roads between cities or in industrial areas with lots of metal and interference.

If Nvidia's hardware and software stack can upgrade and optimize the next generation of wireless communications, which currently takes up 1-2% of the world's entire power, then they would extend their dominance from data centers into edge AI, industrial robotics, and self-driving cars. That's why NVIDIA's new partnership with Uber is also such a big deal. NVIDIA and Uber are partnering to deploy one of the world's largest networks of robo-taxis and autonomous delivery vehicles.

Their goal is to scale up to 100,000 level 4 autonomous vehicles that will use NVIDIA's Drive Hyperion platform in the cars and NVIDIA's Cosmos and Omniverse software to process, curate, train, and distill large amounts of driving data. The thing I love about NVIDIA's strategy as an investor is that they're sticking to what they do best and they're letting their partners do the same.

NVIDIA brings the AI chips and software, while companies like Mercedes-Benz, BYD, Lucid, and Rivian focus on the cars and Uber runs the fleet, just like with Nokia and their wireless base stations for 6G networks, and just like with Palantir and CrowdStrike with their own enterprise software.

So hopefully this video helped you understand some of the biggest announcements Jensen made during his latest keynote in Washington DC, including Nvidia's new Rubin GPUs, their overall hardware ecosystem for AI data centers, and how that ecosystem will expand into new markets like quantum computing, wireless communication, robotics, and self-driving cars. This is why they have more than half a trillion dollars in revenue booked from now through 2026.

This is why Nvidia became the world's first 5 trillion dollar company, just like I said they would 3 years ago. This is why understanding the science behind the stocks is the best way to get rich without getting lucky. And if you want to see what other stocks I'm buying to get rich without getting lucky, check out this video next. Either way, thanks for watching and until next time, this is Ticker Symbol U. My name is Alex, reminding you that the best investment you can make is in you.

Key Takeaways

The key takeaways from Jensen's keynote are:

- NVIDIA's new Rubin GPUs will change the game for AI computing

- NVIDIA's hardware ecosystem is expanding into new markets like quantum computing, wireless communication, robotics, and self-driving cars

- NVIDIA's partnerships with companies like Palantir, CrowdStrike, and Uber will drive growth and innovation in these new markets

- NVIDIA's NVLink, NVLink Fusion, and NVQLink technologies will enable the company to dominate the data center and edge AI markets

- NVIDIA's strategy of sticking to what they do best and letting their partners do the same will drive success in the AI era

Check out our YouTube Channel

Get the latest videos and industry deep dives as we check out the science behind the stocks.