I just got back from Computex and I'm convinced that Wall Street does not understand NVIDIA. That's because the key to great investments is understanding a company's products, not just their profits. And after everything I saw in Taiwan and on their latest earnings call, I'm convinced that NVIDIA will be the world's first $5 trillion company. Let me show you why. Your time is valuable, so let's get right into it.

Table of Contents

1. NVIDIA Earnings

2. NVLink Fusion and Dynamo

3. NVIDIA Stock: Short, Medium, and Long Term

If you've been watching this channel for a while, you know that I believe in boots-on-the-ground research. The best way to understand a company's products is to try them, to talk to the developers behind them, and to see how partners, customers, and even their competitors are using them.

And the more I go to these conferences and make videos organizing my thoughts, the more I realize that Wall Street analysts still don't understand companies like Nvidia or Palantir or the long-term implications of generative AI. So, I went to two conferences in Taiwan, Computex 2025 and NVIDIA GTC Taipei. And there were tons of companies working on every aspect of AI, from self-driving cars and supercomputers to robots and digital agents.

So I'll split all my investing insights across two videos. My next one will cover all things Computex, and this video will be all about NVIDIA, including their latest earnings call, the Trump administration forcing NVIDIA out of China, the breakthrough networking technologies that Jensen just revealed in Taiwan, and of course, what all this means for Nvidia stock over the short, medium and long term. There's a ton to talk about, but let's start with Nvidia's earnings first.

Nvidia reported record revenues of $44.1 billion for the quarter, which is up 12% quarter over quarter and a whopping 69% year over year. Nvidia also posted earnings per share of $0.81, which is up 33% year over year, but actually down 9% quarter over quarter. And I'll explain why in a second. NVIDIA's biggest business unit by far is data centers, accounting for 89% of their total revenues today.

Data center revenues came in at $39 billion for the quarter, which is up 10% from last quarter and up 73% year over year.
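To make those growth numbers a bit more concrete, here's a rough sketch that backs out the implied prior-period revenues from the rounded figures quoted above. The variable and function names are my own, and because the inputs are rounded, the outputs are approximations rather than reported figures. For example, the rounded inputs put the data center share at about 88%, a touch under the 89% quoted, which is most likely just rounding in the $39 billion figure.

```python
# Figures quoted in this video, in billions of USD (rounded).
total_revenue = 44.1
data_center_revenue = 39.0

# Growth rates quoted above, expressed as fractions.
total_qoq, total_yoy = 0.12, 0.69
dc_qoq, dc_yoy = 0.10, 0.73


def implied_prior(current: float, growth: float) -> float:
    """Back out an earlier period's value from the current value and its growth rate."""
    return current / (1.0 + growth)


dc_share = data_center_revenue / total_revenue

print(f"Data center share of revenue:       {dc_share:.1%}")
print(f"Implied total revenue last quarter: ${implied_prior(total_revenue, total_qoq):.1f}B")
print(f"Implied total revenue a year ago:   ${implied_prior(total_revenue, total_yoy):.1f}B")
print(f"Implied data center last quarter:   ${implied_prior(data_center_revenue, dc_qoq):.1f}B")
print(f"Implied data center a year ago:     ${implied_prior(data_center_revenue, dc_yoy):.1f}B")
```

Dividing by one plus the growth rate is the only trick here: a 69% year-over-year increase means last year's quarter was a bit under 60% of today's number.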

Just to put that in perspective, Amazon Web Services powers roughly one-third of the entire internet, and AWS reported $29 billion in revenue last quarter. So Nvidia's data center revenues coming in at $39 billion shows just how huge the global AI infrastructure buildout is getting. One thing I realized after talking to many companies in Taiwan is that Nvidia is not a data center company. They're an AI infrastructure company, and thinking about them that way changes how I see their tech stack and how I track their growth.

For example, the Stargate projects in the US and UAE are actually huge AI infrastructure projects that are designed to support national AI research and deployment. NVIDIA has many quote-unquote sovereign AI projects like this around the world, which means deploying AI factories that are powering models designed and fine-tuned for specific countries, cultures, languages, and laws.

And just like we can measure a country's total electricity generation in terawatt hours per year, I think we'll soon be looking at total token generation the exact same way.

But if that's true, and Nvidia is the world's premier AI infrastructure company, then why are their earnings down by 9% quarter over quarter? Well, on April 9th, the US government informed Nvidia that they'd need a license to export their Hopper H20 chips to China, effectively stopping them from providing infrastructure to one of the biggest AI markets on the planet. These new export controls were one of the biggest focus points of the entire earnings call, because Nvidia ended up taking a $4.5 billion write-down for the chips they couldn't sell to China.

In my opinion, the Trump administration's restrictions on AI chip sales to China hurt us way more than they help. Why? China is one of the world's largest AI markets, valued at over $50 billion today, and half of all AI researchers on Earth come from China. Companies like Huawei, Baidu, Alibaba, and DeepSeek prove that China's AI industry will keep improving with or without U.S. chips, and limiting their access only makes them more creative.

So the real policy question should be whether one of the world's largest AI markets should run on American chips or on hardware from our rivals. And this could end up being a trillion-dollar question, especially as we start scaling even more advanced technologies on top of AI, like 6G networks and quantum computing. So, Nvidia took a $4.5 billion write-down on the H20 chips that they can no longer sell to China, which dropped their gross margins to 61% and their earnings per share to 81 cents.

Without that write-down, Nvidia's gross margins would have been 71.3% and their earnings per share would have been 96 cents, much higher than what they ended up reporting. Gross margins of 71% put Nvidia in the same range as most software companies.
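As a quick sanity check, the gap between the reported and adjusted gross margins should roughly match the size of the write-down. Here's a minimal sketch using only the figures quoted above. It assumes the whole charge flows through cost of revenue, which is my simplification, and the variable names are my own.

```python
# Figures quoted above (revenue and write-down in billions of USD).
revenue = 44.1
write_down = 4.5

reported_margin = 0.61    # gross margin as reported
adjusted_margin = 0.713   # gross margin excluding the H20 write-down

reported_eps = 0.81       # earnings per share as reported
adjusted_eps = 0.96       # earnings per share excluding the write-down

# Assumption: the entire charge hits cost of revenue, so the margin gap
# multiplied by revenue should land close to the write-down itself.
margin_gap = adjusted_margin - reported_margin
implied_charge = margin_gap * revenue
eps_gap = adjusted_eps - reported_eps

print(f"Margin gap:     {margin_gap * 100:.1f} percentage points")
print(f"Implied charge: ${implied_charge:.2f}B (quoted write-down: ${write_down}B)")
print(f"EPS impact:     ${eps_gap:.2f} per share")
```

The implied charge comes out within rounding of the $4.5 billion figure, so the numbers from the call are consistent with each other.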

NVLink Fusion and Dynamo

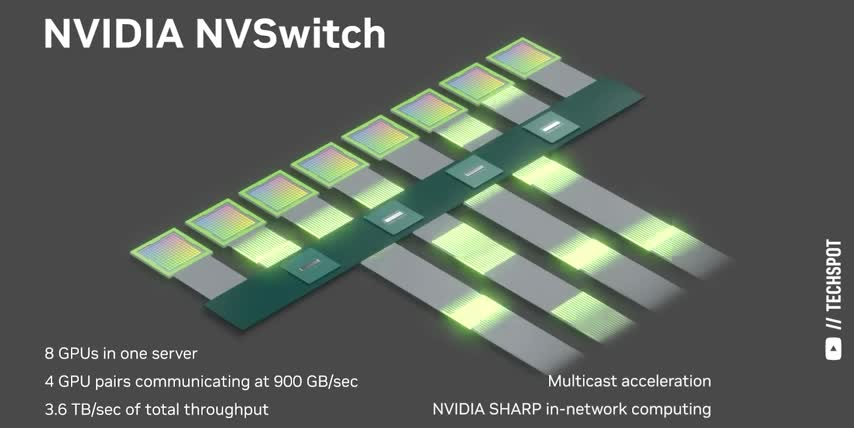

But while I was in Taiwan, Jensen announced a breakthrough piece of networking technology that changes everything when it comes to AI infrastructure. It's called NVLink Fusion, and it exists so that you can build semi-custom AI infrastructure, not just semi-custom chips.

NVLink Fusion allows NVIDIA's proprietary NVLink interconnects to work with non-NVIDIA processors, like Intel's CPUs, AMD's GPUs, and Broadcom's custom ASICs, essentially fusing those third-party chips into NVIDIA's high-speed networks by using a special pre-verified NVLink chiplet. That lets NVIDIA sell their networking technologies to data centers and get on the upgrade path for individual compute clusters that are already heavily invested in non-NVIDIA chips.

For example, Google, Amazon, and Microsoft can use these NVLink chiplets to combine their own custom chips with NVIDIA's GPUs.

That way, they can create tailored AI solutions for a wide variety of use cases instead of having to choose one kind of chip over the other. Chip makers like Qualcomm, Intel, and AMD can now use NVIDIA's interconnects instead of developing their own scale-up networking solutions. And for NVIDIA, NVLink Fusion is a real one-two punch. One, it becomes the perfect first product to put in every compute cluster, and then NVIDIA can expand their footprint from there as clients and workloads continue to evolve over time.

And two, it's a hedge against a future where companies reduce their reliance on expensive GPUs altogether in favor of custom application-specific chips. While I was in Taiwan, I got to talk directly to Jensen, so I asked him how factories using NVLink Fusion would decide which chips to send specific workloads to. Like, say you had a large network of Nvidia's Blackwell Ultras and Google TPUs.

How does the data center coordinate all the parallel processing between these two different kinds of chips? That's when Jensen told me about Dynamo, NVIDIA's open source inference software designed for deploying generative AI and reasoning models across distributed environments. Dynamo was actually announced at NVIDIA GTC back in March, but it seemingly flew under the radar, kind of like NVLink Fusion did in May.

Dynamo optimizes chip utilization, data caching, and workload routing to get the most tokens out of large-scale AI factories. Remember, more tokens means more revenue. And because Dynamo is a fully open-source framework, developers can integrate it into different AI workflows, AI models, and even AI chips connected via NVLink Fusion. So, if we think of NVLink as an AI factory's nervous system, then Dynamo would be its brain.
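To give a feel for what "workload routing" with caching in mind can look like, here's a toy sketch. To be clear, this is not Dynamo's API or its actual algorithm. The `Worker` class, the `route` function, and the chip names are all hypothetical, and the policy is just one simple heuristic: send a request to a chip that already has its prompt prefix cached, and otherwise to the least busy chip.

```python
from dataclasses import dataclass, field


@dataclass
class Worker:
    """A hypothetical accelerator (GPU or custom ASIC) in the cluster."""
    name: str
    active_requests: int = 0
    cached_prefixes: set[str] = field(default_factory=set)


def route(prompt_prefix: str, workers: list[Worker]) -> Worker:
    """Prefer a worker that already caches this prefix, then the least busy one."""
    def score(worker: Worker) -> tuple[int, int]:
        cache_miss = 0 if prompt_prefix in worker.cached_prefixes else 1
        return (cache_miss, worker.active_requests)

    chosen = min(workers, key=score)
    chosen.active_requests += 1                # the request is now running there
    chosen.cached_prefixes.add(prompt_prefix)  # its prefix stays cached for reuse
    return chosen


# Hypothetical mixed cluster: two GPUs and one custom ASIC.
workers = [Worker("gpu-0"), Worker("gpu-1"), Worker("asic-0")]

first = route("system-prompt-A", workers)
second = route("system-prompt-B", workers)
third = route("system-prompt-A", workers)  # goes back to the chip with A cached

print(first.name, second.name, third.name)
```

The third request lands on the same chip as the first even though that chip is busier, because reusing cached work can be cheaper than recomputing it. That trade-off between cache reuse and load balancing is the kind of decision a routing layer has to make.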

And innovations like these are why I think of Nvidia as a global AI infrastructure company. And now we've come full circle, because even though the Trump administration's export controls are stopping Nvidia from selling their infrastructure to China's $50 billion AI market, Dynamo and NVLink Fusion open up new markets for Nvidia right here in the US: mainly, every data center and high-performance compute cluster that was already too invested in non-NVIDIA processors to use NVIDIA's ecosystem.

NVIDIA Stock: Short, Medium, and Long Term

There's no doubt that being forced to stop selling chips to China is a net negative for Nvidia and probably the entire world. Nvidia's data center revenues were $39 billion this past quarter, so the roughly $8 billion in H20 revenue they expect to lose in quarter 2 is going to be a sizable piece of the pie. But even with this headwind, Nvidia's data center revenues grew by 73% year over year. That's not bad for one of the biggest companies on the planet. Nvidia also has plenty of catalysts in the near future.

First, GTC Paris is right around the corner, which will be another massive Nvidia developer conference where I'm expecting even more AI announcements. And just like GTC in March and GTC Taipei in May, I'll be there live to cover Jensen's keynote, check out Nvidia's newest products and prototypes, and keep learning as much as I can about the science behind this stock. GTC Paris will be part of France's Viva Technology conference, kind of like how GTC Taipei was part of Computex.

I'll leave some links in the description in case you want to check them out or at least put the major keynotes on your calendar. I expect Jensen's keynote to have updates on all the new networking technologies that I've covered in my last few videos on NVIDIA, like their co-packaged optics and NVLink Fusion, as well as the B300 Blackwell Ultras, which are set to ship in the second half of 2025.

Remember, Blackwell is an accelerated computing platform, so we should be on the lookout for new information on the GPUs, the Grace CPUs, the BlueField DPUs, the NVLink switch chips, and the InfiniBand and Spectrum-X networking solutions. And it's not just about data centers. I spent a lot of time in Taiwan understanding the different kinds of capabilities that Blackwell enables at the edge.

I went around the entire show floor and I got access to a few private demos that showcased a few new AI workloads that totally blew my mind. I also interviewed a couple more senior leaders, and I'll be sharing all of that with you over the next few weeks. And while I'm in France, I'm also hoping to learn more about the architectures that come after Blackwell: Rubin, Rubin Ultra, and Feynman. Like I've said before, it's not just about the next three chips.

It's also about the overall performance gains between them. The bigger the boost each architecture provides to data center token generation, the faster AI costs come down, which means more AI adoption across the board. That would obviously be great for Nvidia stock over the medium and long term. So Nvidia's earnings call was awesome even with the $4.5 billion write-down.

They announced key technologies like Dynamo and NVLink Fusion to expand their total addressable market into previously untapped data centers. And there's one more conference where we could learn even more about all the architectures further down their roadmap. So with all that, I still think Nvidia will be the first company to hit $5 trillion in market cap. So my plan is to keep dollar cost averaging in over the long term, just like I have been for the last 9 years.

And if you want to see what other stocks I'm buying, make sure to check out this video next. Thanks for watching to the end even though I gave you everything up front. And until next time, this is TickerSymbol: YOU. My name is Alex, reminding you that the best investment you can make is in you. Thank you.

Key Takeaways

NVIDIA's latest earnings call revealed record revenue of $44.1 billion, with data center revenues accounting for 89% of the total. The company's earnings per share were up 33% year over year, but down 9% quarter over quarter due to a $4.5 billion write-down for H20 chips it could no longer sell to China.

NVIDIA's NVLink Fusion technology allows for semi-custom AI infrastructure, enabling the company to expand its total addressable market into previously untapped data centers. The company's Dynamo open-source inference software optimizes chip utilization, data caching, and workload routing for large-scale AI factories.

NVIDIA's stock is expected to be impacted by the Trump administration's export controls, but the company has plenty of catalysts in the near future, including the upcoming GTC Paris conference. The company's long-term prospects look promising, with a potential market cap of $5 trillion.